The Rise of Experts: Why Domain-Specific AI Models Are Winning the Enterprise War


The corporate boardroom is undergoing a fundamental shift. The initial excitement of “AI adoption” has been replaced by a rigorous demand for return on investment (ROI) and consistent reliability. A recurring theme has emerged in recent industry panels: the “Generalist” Large Language Models (LLMs) that dominated the early narrative are increasingly being relegated to creative brainstorming and basic drafting. For mission-critical work, the focus has turned to domain-specific AI models.

The reason for this shift is simple: expertise requires more than just a large dataset; it requires a deep, nuanced understanding of specific workflows, terminologies, and regulatory constraints. Here is why specialized intelligence is winning the battle for the modern enterprise.

The Accuracy Gap: Precision Over Generalization

The primary weakness of general-purpose AI has always been its tendency to provide “plausible-sounding” but factually incorrect information. In a casual chat, this is a minor annoyance; in a legal brief or a medical diagnosis, it is catastrophic.

Domain-specific AI models are trained or fine-tuned on high-fidelity, curated datasets drawn from a single field. For instance, while a general model might understand the broad concept of a “contract,” a legal-specific model has ingested millions of pages of case law, procedural requirements, and, in many deployments, a firm’s own internal documents and conventions. These models still predict the next word, but because their training data is concentrated on one domain, those predictions reflect the underlying logic of that domain, which typically produces far fewer errors on in-domain tasks than their generalist counterparts. When the goal is accuracy rather than creative flair, the specialist usually has the edge.

The Economics of Efficiency: Smaller is Faster

In the early days of the AI boom, companies spent heavily on API tokens to run 175-billion-parameter models for relatively simple tasks. Today, the era of “compute waste” is ending. By using domain-specific AI models, which are often built as Small Language Models (SLMs), enterprises are seeing significant reductions in operational costs.

A 7-billion-parameter model fine-tuned for financial risk assessment can, on that narrow task, outperform a general 1-trillion-parameter model while costing a fraction of the price to run. Because these specialized models need less memory and fewer GPUs, companies can run them on-premises or even on edge devices. This bypasses expensive cloud APIs and can bring latency down to sub-second levels, making real-time AI assistance a practical reality rather than a laggy experiment.
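To make the memory argument concrete, here is a back-of-the-envelope sketch in Python. It counts only the memory needed to hold the model weights (parameters times bytes per parameter) and assumes 80 GB accelerators; the function names are illustrative. Real deployments also need room for the KV cache, activations, and runtime overhead, so treat these figures as lower bounds rather than sizing guidance.

```python
import math


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to store the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def gpus_needed(num_params: float, bytes_per_param: float, gpu_memory_gb: float = 80.0) -> int:
    """Minimum number of GPUs whose combined memory can hold the weights.

    Assumes 80 GB per GPU by default and ignores KV cache, activations, and overhead.
    """
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / gpu_memory_gb)


# Parameter counts taken from the comparison in the text.
models = {"7B specialist": 7e9, "175B generalist": 175e9, "1T generalist": 1e12}

for name, params in models.items():
    fp16 = weight_memory_gb(params, 2)    # 16-bit weights: 2 bytes per parameter
    int4 = weight_memory_gb(params, 0.5)  # 4-bit quantized weights: 0.5 bytes per parameter
    print(f"{name}: {fp16:,.1f} GB at fp16 "
          f"({gpus_needed(params, 2)} x 80 GB GPUs), {int4:,.1f} GB at 4-bit")
```

At 16-bit precision the 7B model needs about 14 GB for its weights and fits on a single 80 GB GPU, while a 175B model needs about 350 GB (five such GPUs) and a 1-trillion-parameter model about 2,000 GB. Quantizing the 7B model to 4 bits shrinks it to roughly 3.5 GB, which is what makes on-premises and edge deployment plausible.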

Industry Sovereignty: Speaking the Language

Every industry has its own “native dialect.” Medicine has complex Latinate terminology and intricate drug interactions; finance has SEC filings and volatile market dynamics that change by the millisecond. General AI models often flatten these nuances, providing “plain English” summaries that miss the technical depth required by a professional user.

The success of specialized models in the financial sector has shown that a system trained on forty years of financial data can develop a depth of understanding that a general web-scraped model struggles to replicate. Similar patterns are appearing across other sectors: medical models are increasingly relied on for clinical assistance, and engineering-focused engines are becoming primary tools for system-level programming and debugging.

Privacy and Compliance as a Feature

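One of the biggest hurdles for general AI in the enterprise has been the “Black Box” problem: once sensitive data is sent to a massive cloud-hosted model, the company has little visibility into how that data is stored, logged, or used. That loss of control raised red flags under data privacy regulations like GDPR and HIPAA.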

Domain-specific AI models offer a solution through their “compactness.” Because they are smaller and more efficient, they can be deployed entirely behind a company’s firewall. This “Sovereign AI” approach keeps proprietary data inside the organization’s own infrastructure. As a result, highly regulated industries like banking and government can adopt AI without handing sensitive data to a third party, removing one of the largest sources of data-leak and breach risk.
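One way to enforce the “data never leaves the building” rule in application code is a guard that refuses to send prompts anywhere outside the private network. The sketch below is a hypothetical illustration, not a complete security control: it assumes the in-house model is reached over HTTP at a private or loopback IP address, or at an allowlisted internal hostname (`llm.internal.example.com` is a placeholder), and it uses only Python’s standard `ipaddress` and `urllib.parse` modules.

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical allowlist of internal DNS names; replace with your own.
INTERNAL_HOSTNAMES = {"llm.internal.example.com"}


def is_inside_firewall(endpoint_url: str) -> bool:
    """True if the endpoint is a private/loopback IP literal or an allowlisted internal host."""
    host = urlparse(endpoint_url).hostname  # lowercased, IPv6 brackets stripped
    if host is None:
        return False
    if host in INTERNAL_HOSTNAMES:
        return True
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        # Any other DNS name is treated as external: we cannot tell where it resolves.
        return False
    return addr.is_private or addr.is_loopback


def send_prompt(endpoint_url: str, prompt: str) -> str:
    """Refuse to send data to external endpoints; otherwise forward the prompt."""
    if not is_inside_firewall(endpoint_url):
        raise PermissionError(f"Refusing to send data to external endpoint: {endpoint_url}")
    # Placeholder: a real deployment would call the local model server here.
    return f"[sent {len(prompt)} chars to {endpoint_url}]"
```

Treating unknown DNS names as external is a deliberately conservative choice, since a name alone does not reveal whether it resolves inside the firewall. Network-level controls such as egress rules are still needed; a check like this only catches configuration mistakes in the application itself.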

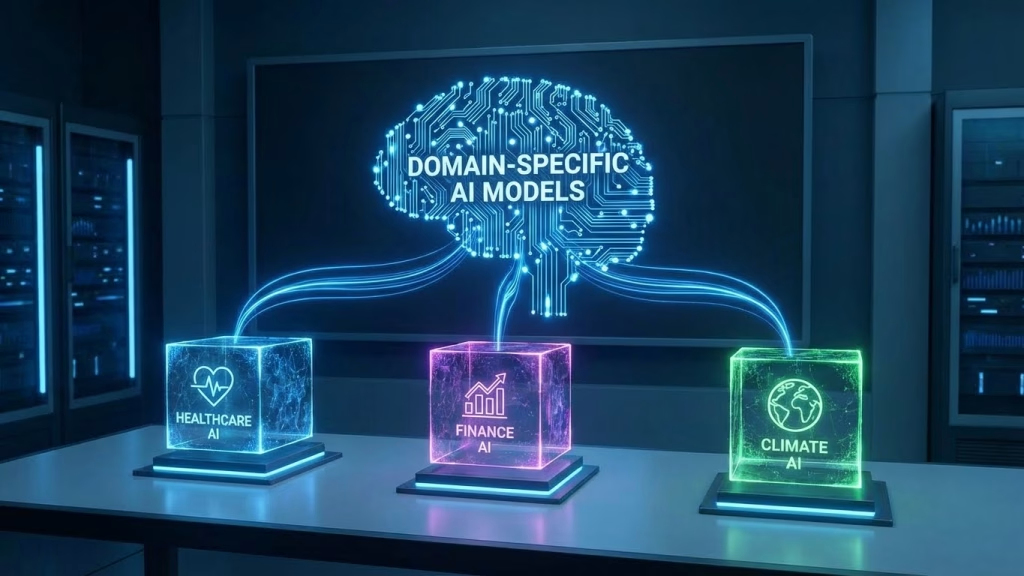

The Rise of the “Model Router”

The most interesting emerging trend is that organizations no longer rely on a single AI. Instead, they use “Model Routers”: AI agents that analyze a user’s request and “route” it to the most qualified domain-specific AI model.

If you ask a question about your tax return, the router sends it to a finance-tuned model. If you then ask for a summary of a patent, it hands the conversation off to a legal-specific model. This “ensemble” approach offers the best of both worlds: the broad interface of a general agent combined with the laser-focused expertise of a specialist. When the routing is accurate, every task is handled by the “expert” best suited for the job.
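The routing idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the model names (`finance-model`, `legal-model`, `general-model`) are hypothetical, each “model” is a stub function rather than a real inference call, and simple keyword overlap stands in for the trained intent classifier or embedding similarity a production router would more likely use.

```python
import re
from dataclasses import dataclass
from typing import Callable, FrozenSet, List


@dataclass
class Specialist:
    name: str                      # hypothetical model identifier
    keywords: FrozenSet[str]       # crude domain signal (stand-in for a trained classifier)
    handler: Callable[[str], str]  # stand-in for a call to the deployed model


def stub(model_name: str) -> Callable[[str], str]:
    """Placeholder handler; a real system would call the model's inference endpoint."""
    return lambda prompt: f"[{model_name}] {prompt}"


SPECIALISTS: List[Specialist] = [
    Specialist("finance-model",
               frozenset({"tax", "revenue", "invoice", "portfolio", "dividend"}),
               stub("finance-model")),
    Specialist("legal-model",
               frozenset({"patent", "contract", "clause", "litigation", "liability"}),
               stub("legal-model")),
]
FALLBACK = Specialist("general-model", frozenset(), stub("general-model"))


def _score(specialist: Specialist, tokens: List[str]) -> int:
    """Number of prompt tokens that match the specialist's keywords."""
    return sum(token in specialist.keywords for token in tokens)


def route(prompt: str) -> Specialist:
    """Pick the specialist with the most keyword matches; fall back to the general model."""
    tokens = re.findall(r"[a-z]+", prompt.lower())
    best = max(SPECIALISTS, key=lambda s: _score(s, tokens))
    return best if _score(best, tokens) > 0 else FALLBACK


def answer(prompt: str) -> str:
    """Route the prompt and return the chosen model's (stubbed) response."""
    return route(prompt).handler(prompt)


print(answer("How do I deduct home office costs on my tax return?"))
print(answer("Summarize this patent for me."))
print(answer("Write a haiku about autumn."))
```

Requests with no domain signal fall through to the general model, which preserves the broad interface while sending recognizable tasks to a specialist. Replacing the keyword scoring with a learned classifier would change only the `route` function.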

The Future Belongs to the Specialist

The transition to specialized intelligence is a sign that the AI industry is maturing. We have moved past the “magic trick” phase and into the “utility” phase. Being “good at everything” is no longer a competitive advantage for an AI system. To survive in the modern enterprise, a model must be an expert in its field.

The future is not a single, all-knowing “God-like” AI. It is a diverse ecosystem of domain-specific AI models working in concert, providing the precision, safety, and efficiency that professionals and industries now demand.